Reshaping the Future of Emotion AI

Inside the Technology Square Research Building (TSRB) in Tech Square, Dr. Noura Howell and her team at the Future Feelings Lab are redefining how we perceive emotions in the digital age. Howell, an Assistant Professor at Georgia Tech with a background in Human-Computer Interaction (HCI) and design research, leads a team that explores the complex relationship between technology, emotion, and society. “The Future Feelings Lab’s mission is to explore alternative ways of feeling and imagination with technology,” she explains. Her work challenges the status quo, asking critical questions about how technology can better understand and respond to human emotions without reducing them to mere data points.

A Space for Imagination and Critique

The Future Feelings Lab is more than just a research hub—it is a space where interdisciplinary collaboration thrives. Howell and her team are particularly interested in the ethical implications of emotional biosensing and Emotion AI—technologies that claim to detect, predict, and even influence human emotions based on data such as facial expressions, physiological signals, and behavioral patterns. However, Howell quickly points out that these technologies are far from neutral.

“Imagination influences the future directions of AI,” she says, “but right now, tech companies are promoting their imagined futures. Why are tech companies the ones to decide the future of AI?” This question lies at the heart of Howell’s work. She believes that the future of AI should not be shaped solely by a handful of tech giants. “Why should we let people like Elon Musk be the ones to decide the direction of AI?” she asks. Instead, Howell advocates for a more inclusive approach: “We need to invite more diverse people to imagine the future of technology.” This ethos is reflected in the lab’s commitment to reflective design. This methodology encourages critical reflection on the assumptions embedded in technology, thereby inviting broader participation in shaping its future.

Emotion AI

One of Howell’s most recent contributions to the field is her 2024 paper, "Reflective Design for Informal Participatory Algorithm Auditing: A Case Study with Emotion AI." The paper examines the ethical and practical challenges of Emotion AI, a rapidly evolving field that uses algorithms to predict human emotions from facial expressions and other biometric data.

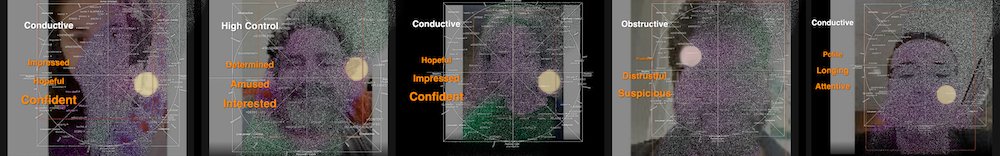

Participants in the study saw how an Emotion AI algorithm predicted their emotions based on images of their faces. This image has been altered to creatively express the sometimes uncomfortable experience of having one's own emotions categorized by AI.

In the study, Howell and her team designed a simple web-based tool that allowed participants to compare their self-reported emotions with predictions made by an Emotion AI system. The goal was to invite critical reflection on the accuracy and ethical implications of Emotion AI. Participants used the probe in their daily lives, logging their emotions and comparing them to the AI’s predictions. The findings revealed significant discrepancies between how people felt and how AI categorized their emotions, highlighting the limitations and potential harm of Emotion AI.
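To make the comparison concrete, here is a minimal sketch of how logged self-reports might be set against an algorithm's predictions. It is not the lab's tool: the `compare` function, the emotion labels, and the sample entries are all hypothetical and chosen only for illustration, and none of the values are data from the study.

```python
from collections import Counter

# Hypothetical log entries as (self_reported, ai_predicted) pairs.
# These are made-up illustrative values, NOT data from the study.
log = [
    ("happy", "neutral"),
    ("neutral", "disgust"),
    ("sad", "disgust"),
    ("happy", "happy"),
    ("neutral", "neutral"),
]


def compare(entries):
    """Return the agreement rate and a tally of mismatched (felt, predicted) pairs."""
    entries = list(entries)
    if not entries:
        return 0.0, Counter()
    # Count entries where the self-report and the prediction are the same label.
    matches = sum(1 for felt, predicted in entries if felt == predicted)
    # Tally each distinct way the prediction differed from the self-report.
    mismatches = Counter(
        (felt, predicted) for felt, predicted in entries if felt != predicted
    )
    return matches / len(entries), mismatches


agreement, mismatches = compare(log)
print(f"Agreement: {agreement:.0%}")
for (felt, predicted), count in mismatches.most_common():
    print(f"felt {felt!r}, AI predicted {predicted!r}: {count}")
```

A tally like this only shows where the two labels differ. It treats the self-report as the reference point, which fits the study's emphasis on how people actually felt rather than on the algorithm's output.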

Key takeaways from the study include:

Critiques of Emotion AI: Participants highlighted fundamental flaws in Emotion AI, including the assumption that facial expressions directly correspond to internal emotions. As one participant noted, "People don’t really express emotions on their faces. If I feel happy, it doesn’t show." This critique aligns with Howell’s earlier work, such as her 2018 paper "Emotional Biosensing: Exploring Critical Alternatives," in which she argued that emotional biosensing technologies often oversimplify the complexity of human emotions.

Factors Contributing to Inaccuracy: Participants identified several factors that led to inaccurate predictions, including lighting conditions, wearing glasses, and even makeup. These findings underscore the need for more robust and inclusive training data for Emotion AI systems.

Patterns of Miscategorization: The study also revealed troubling patterns, such as the AI miscategorizing neutral or sad expressions as "disgust" or "suspicion." These errors underscore the technical limitations of Emotion AI and raise ethical concerns about how such systems could stigmatize or misrepresent individuals, particularly in high-stakes contexts such as hiring or security.

From Critique to Design: A Call for Ethical Emotion AI

Howell’s research not only critiques existing technologies but also offers a roadmap for more ethical and inclusive AI systems. In her 2024 paper, she calls for humility in AI design, urging developers to acknowledge the limitations of Emotion AI and prioritize user agency. For example, she suggests allowing users to challenge or reinterpret the AI’s predictions rather than treating them as definitive truths.

This approach builds on her earlier work, which advocates a shift away from individual-focused, optimization-driven emotional biosensing toward more care-centered and context-aware designs. “We need to design technologies that respect the complexity of human emotions and invite more diverse voices to the table,” Howell emphasizes.

The Future of Emotion AI: A Collaborative Effort

Howell’s work is a call to action for researchers, designers, and policymakers to rethink how we approach Emotion AI. By integrating reflective design principles and participatory methods, her research opens new possibilities for creating technologies that respect the complexity and diversity of human emotions.

As Emotion AI continues to permeate various aspects of our lives—from workplace monitoring to mental health apps—Howell’s research serves as a reminder that technology should serve humanity, not the other way around. “The future of AI is too important to leave to a few powerful voices,” Howell says. “It’s time for all of us to imagine and build a better future together.”